Big data analytics system (Apache Spark)

The Apache Spark deployment pattern aims to bring spatial analytics to a familiar environment for data scientists using a Spark library, allowing them to add value through spatial functions and processes in new or existing analytics workflows. Apache Spark provides distributed compute capabilities that support access to a broad range of datasets, a robust set of library capabilities, the ability to explore and interact with structured analytics, and the ability to produce results that can be used by a stakeholder or downstream business process.

The Esri-provided Spark library that powers the big data analytics system pattern on Apache Spark is ArcGIS GeoAnalytics Engine. ArcGIS GeoAnalytics Engine uses Apache Spark to provide access to over 150 cloud-native geoanalytics tools and functions for understanding trends, patterns, and correlations. ArcGIS GeoAnalytics Engine can be installed on a personal computer or a standalone Spark cluster, though most organizations use managed Spark services in the cloud, such as Amazon EMR, Databricks, Azure Synapse Analytics, Google Cloud Dataproc, and Microsoft Fabric. These cloud managed environments simplify big data processing, scale with demand, help optimize costs, offer advanced analytic capabilities, support security and compliance requirements, and come with managed services and support from the cloud provider.

In addition to ArcGIS GeoAnalytics Engine, Esri offers an alternative Spark library for spatial big data analytics called the Big Data Toolkit. The Big Data Toolkit (BDT) is an Esri Professional Services solution that offers some unique capabilities and may be appropriate in certain scenarios that have requirements to extend spatial functions and tools with enhanced capabilities. See extended capabilities for more information.

Related resources:

- ArcGIS GeoAnalytics Engine

- ArcGIS GeoAnalytics Engine Install & Setup

- Esri Big Data Toolkit (BDT)

- Apache Spark

Base architecture

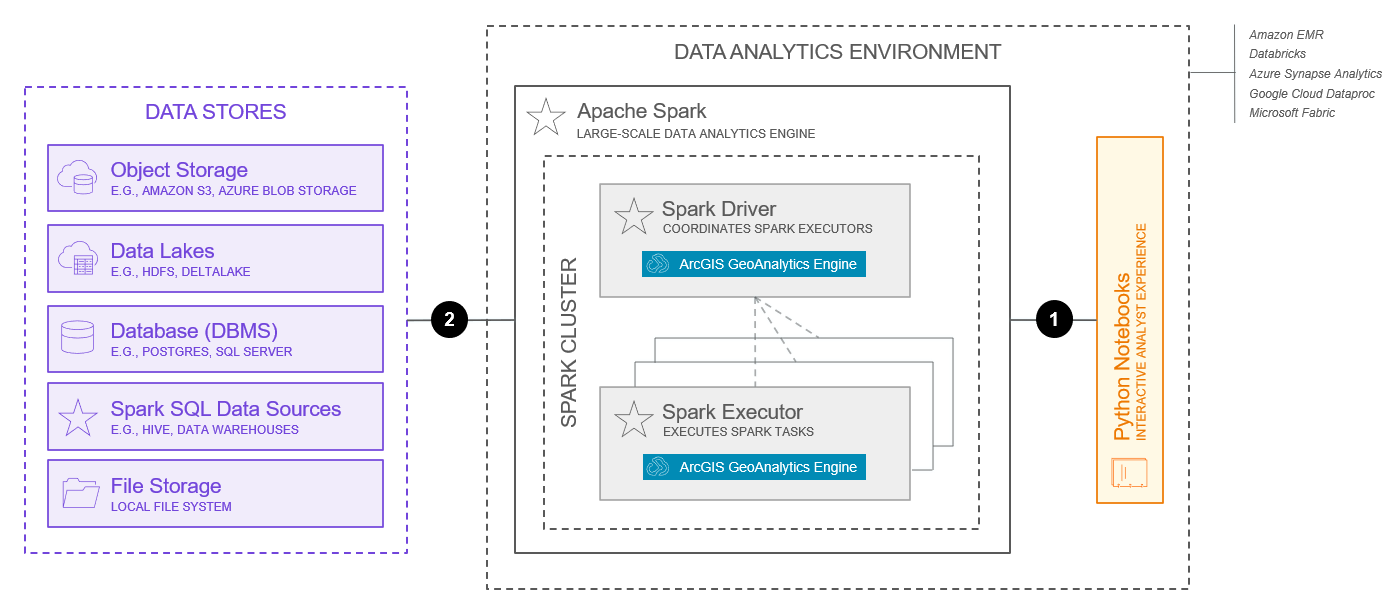

The diagram above shows a typical base architecture for a big data analytics system using Apache Spark.

This diagram should not be taken as is and used as the design for your system. There are many important factors and design choices that should be considered when designing your system. See the using system patterns topic for more information. Additionally, the diagram delivers only the base capabilities of the system; additional system components may be required when delivering extended capabilities.

The capabilities represented above reflect those available as of December 2025.

Key components of this architecture include:

- The foundation of the big data analytics system is an Apache Spark environment. The Spark environment contains clusters of nodes that run distributed analysis tasks. ArcGIS GeoAnalytics Engine can run on a number of Spark environments, including local deployments, cluster deployments, and managed Spark services in the cloud. Supported managed Spark services include Amazon EMR, Azure Synapse Analytics, Google Cloud Dataproc, Databricks, and Microsoft Fabric. These options provide a deployment path for organizations that are already running workloads in an existing cloud environment, including Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure.

- ArcGIS GeoAnalytics Engine is a Spark plugin and set of functions and tools that run on the nodes within an Apache Spark cluster. Spatial analysis tasks are sent from the Spark driver to Spark executors, where the analysis work is performed. The Spark executors are managed by a Spark cluster manager. ArcGIS GeoAnalytics Engine supports Standalone, Apache Mesos, Hadoop YARN, and Kubernetes cluster managers. Learn more about ArcGIS GeoAnalytics Engine Spark cluster mode.

- GeoAnalytics Engine supports loading and saving data from some common spatial data sources in addition to the data sources supported by Spark. These include, but are not limited to, data in file stores, object stores, data lakes, and databases (DBMS). Learn more about supported data stores and supported data sources.

- GeoAnalytics Engine extends PySpark, the Python interface for Spark, and uses Spark DataFrames along with custom geometry data types to represent spatial data. GeoAnalytics Engine comes with several DataFrame extensions for reading from spatial data sources like shapefiles and feature services, in addition to any data source that PySpark supports. GeoAnalytics Engine also includes two core modules for manipulating DataFrames: SQL functions and tools. Users typically interact with the big data analytics system through Python notebooks running within their data analytics environment. Learn more about getting started with ArcGIS GeoAnalytics Engine.

Key interactions in this architecture include:

- PySpark Python code, typically developed in a Python notebook running within the data analytics environment, is bundled as an application and submitted to the Spark cluster. Submitting the job to the Spark cluster is typically performed over TCP. Learn more about submitting Spark applications.

- Communication between ArcGIS GeoAnalytics Engine and data stores is bidirectional, allowing data to be both read from and saved to a number of supported data stores. The technical specifications of that communication depend on the type of data store being used. See ArcGIS GeoAnalytics Engine data storage for more information.

Additional information on interaction between ArcGIS GeoAnalytics Engine and the Apache Spark environment can be found in the ArcGIS GeoAnalytics Engine product documentation.
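The submission step described above can be sketched as a small helper that assembles a `spark-submit` command line. This is a minimal illustration only: `--master`, `--jars`, `--py-files`, and `--conf` are standard `spark-submit` options, but the helper name `build_spark_submit`, the script name, the master URL, the file paths, and the bucket are all hypothetical placeholders rather than values from the ArcGIS GeoAnalytics Engine documentation.

```python
import shlex


def build_spark_submit(app_path, master, jar_path, py_files, conf=None, app_args=()):
    """Assemble a spark-submit argument list for a PySpark application."""
    cmd = ["spark-submit", "--master", master, "--jars", jar_path, "--py-files", py_files]
    # Each Spark configuration property is passed as its own --conf key=value pair.
    for key, value in sorted((conf or {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    # The application script comes after all options, followed by its own arguments.
    cmd.append(app_path)
    cmd.extend(app_args)
    return cmd


cmd = build_spark_submit(
    app_path="hot_spots_job.py",                     # hypothetical analysis script
    master="spark://spark-master:7077",              # hypothetical standalone master URL
    jar_path="/opt/geoanalytics/geoanalytics.jar",   # placeholder path
    py_files="/opt/geoanalytics/geoanalytics.zip",   # placeholder path
    conf={"spark.executor.memory": "8g"},
    app_args=["--input", "s3a://example-bucket/points/"],
)
print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run(cmd, check=True)` on a machine with Spark installed would perform the actual submission; managed Spark services usually wrap this step in their own job submission interfaces.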

Capabilities

The capabilities of the big data analytics system on Apache Spark are described below.

Base Capabilities

Base capabilities represent the most common capabilities delivered by big data analytics systems and are enabled by the base architecture presented above.

- Data ingest enables data to be accessed from a number of supported data stores when performing analysis tasks.

- Spatial joins and relationships enable rows from two DataFrames to be combined based on a spatial relationship. GeoAnalytics Engine offers multiple mechanisms to spatially join data, as well as analyze and understand the spatial relationships between geometries. Learn more about spatial joins and spatial relationships.

- Time steps and temporal relationships enable analysis using time. Time steps slice input data into steps on which analysis is performed independently, whereas temporal relationships are used to join data temporally using the spatiotemporal join tool. Learn more about time stepping and temporal relationships.

- Pattern analysis identifies spatial and temporal patterns in data. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for pattern analysis, including detect incidents, find hot spots, find similar locations, and geographically weighted regression.

- Proximity analysis looks at the proximity of spatial data to other spatial data. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for proximity analysis, including find point clusters, group by proximity, and nearest neighbors.

- Summarization analysis aggregates or summarizes data into higher order data structures. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for summarization analysis, including aggregate points, calculate density, and summarize within.

- Track analysis works with time-enabled points correlated to moving objects. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for track analysis, including reconstruct tracks, calculate motion statistics, and snap tracks.

- Geocoding converts text to an address and a location. ArcGIS GeoAnalytics Engine includes a geocoding tool that works with addresses in Spark DataFrames.

- Network analysis helps solve common network problems, often (but not always) for street networks. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for network analysis, including create service areas, find closest facilities, and generate OD matrix.

- Data management supports operating on geometries and other fields in big data. ArcGIS GeoAnalytics Engine includes a number of tools and SQL functions for data management, including the calculate field tool and many spatial SQL functions that extend the Spark SQL API.

- Mapping and visualization of analysis results is a powerful step to provide context and help uncover patterns, trends, and relationships. Visualizing and mapping is analogous to charting and plotting with non-spatial data. It’s a way to verify your analysis, iterate, and create shareable and engaging results. ArcGIS GeoAnalytics Engine enables you to visualize datasets in Python notebooks. Learn more about visualizing results of your analysis.
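To make three of the capabilities above more concrete (spatial joins, time steps, and summarization), the following is a conceptual sketch in plain Python. It does not use ArcGIS GeoAnalytics Engine or Spark, and it is not how the product implements these operations; the function names, zone names, and sample coordinates are all made up for illustration. It joins points to polygons by containment, assigns each point to a one-hour time step, and counts points per zone and step.

```python
from collections import Counter
from datetime import datetime, timedelta


def point_in_polygon(x, y, ring):
    """Ray-casting test: True if (x, y) falls inside the ring of (x, y) vertices."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the point's y value can cross a horizontal ray.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def time_step_index(t, start, step):
    """Index of the time step containing t, for steps of length `step` from `start`."""
    return (t - start) // step


def aggregate_points(points, zones, start, step):
    """Count points per (zone name, time step) using containment as the join condition."""
    counts = Counter()
    for x, y, t in points:
        for name, ring in zones.items():
            if point_in_polygon(x, y, ring):
                counts[(name, time_step_index(t, start, step))] += 1
    return counts


# Hypothetical sample data: two square zones and four timestamped points.
zones = {
    "west": [(0, 0), (5, 0), (5, 5), (0, 5)],
    "east": [(5, 0), (10, 0), (10, 5), (5, 5)],
}
start = datetime(2025, 1, 1)
points = [
    (1.0, 1.0, datetime(2025, 1, 1, 0, 30)),
    (2.0, 3.0, datetime(2025, 1, 1, 0, 45)),
    (7.0, 2.0, datetime(2025, 1, 1, 1, 15)),
    (12.0, 2.0, datetime(2025, 1, 1, 1, 20)),  # falls outside both zones
]
result = aggregate_points(points, zones, start, timedelta(hours=1))
print(dict(result))
```

The nested loop compares every point with every polygon, which is exactly the kind of work that becomes impractical at scale on a single machine and that a distributed Spark environment is meant to spread across executors.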

Extended capabilities

Extended capabilities are typically added to meet specific needs or support industry-specific data models and solutions, and may require additional software components or architectural considerations.

- Raster analysis supports analytic functions and processors working against raster data. ArcGIS GeoAnalytics Engine does not yet support raster analysis; however, Esri Big Data Toolkit does provide some basic raster analysis processors that combine raster and vector data such as erase raster and tabulate area. For more advanced raster and imagery analysis please see the imagery data management and analytics system pattern.

- Custom analysis tools are not yet supported with ArcGIS GeoAnalytics Engine, but can be developed using the Esri Big Data Toolkit (BDT). BDT can be extended by modifying or creating analytic tools and functions.

- Data publishing and hosting of analysis results is supported by ArcGIS but is considered outside of the scope of the big data analytics system. Publishing and sharing is most commonly addressed by a self-service mapping, analysis, and sharing system; however, many of the ArcGIS system patterns support publishing and sharing of analysis results. Learn more about using, integrating, and composing system patterns.

Considerations

The considerations below apply the pillars of the ArcGIS Well-Architected Framework to the big data analytics system on Apache Spark. The information presented here is not meant to be exhaustive, but rather highlights key considerations for designing and/or implementing this specific combination of system and deployment pattern. Learn more about the architecture pillars of the ArcGIS Well-Architected Framework.

Reliability

Reliability ensures your system provides the level of service required by the business, as well as your customers and stakeholders. For more information, see the reliability pillar overview.

- Reliability is almost entirely handled by the Apache Spark environment. There are many factors to consider, such as the selection of a cluster manager, and these vary significantly based on where Spark is installed (local, cluster, or managed cloud service).

- SLAs are less common with big data analytics systems than with other systems.

Security

Security protects your systems and information. For more information, see the security pillar overview.

- Authorization to use a big data analytics system is supported using username and password or a license file provided by Esri.

- Authentication, encryption, logging, and other security considerations are typically handled outside of the ArcGIS GeoAnalytics Engine software, either at data sources or in the Apache Spark environment. Learn more about Spark security.

Performance & scalability

Performance and scalability aim to optimize the overall experience users have with the system, as well as ensure the system scales to meet evolving workload demands. For more information, see the performance and scalability pillar overview.

- The advantage of using Apache Spark based environments is their distributed compute capabilities. Many organizations have cloud managed environment administrators who understand how to control the scale of the compute resources used by clusters and managed analytics. Spatial big data analytics is not inherently different in this respect. There is a cost associated with performing analytics at scale, so organizations should conduct a cost-benefit analysis to determine the compute they wish to assign to spatial analytics.

Learn more about tuning Spark.
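As a rough illustration of the cost-benefit trade-off mentioned above, the sketch below estimates runtime and cost for different cluster sizes. It assumes a simple Amdahl's law model in which only part of a job benefits from additional nodes; the single-node runtime, parallel fraction, and hourly rate are hypothetical numbers, and real workloads should be measured rather than modeled this way.

```python
def estimated_runtime_hours(single_node_hours, parallel_fraction, nodes):
    """Amdahl's law: only the parallel fraction of the job speeds up with more nodes."""
    serial_fraction = 1.0 - parallel_fraction
    return single_node_hours * (serial_fraction + parallel_fraction / nodes)


def estimated_cost(single_node_hours, parallel_fraction, nodes, hourly_rate_per_node):
    """Cost is the number of nodes times the hourly rate times the estimated runtime."""
    runtime = estimated_runtime_hours(single_node_hours, parallel_fraction, nodes)
    return nodes * hourly_rate_per_node * runtime


# Hypothetical job: 10 hours on one node, 90% parallelizable, 0.50 per node-hour.
estimates = {
    nodes: (
        estimated_runtime_hours(10.0, 0.9, nodes),
        estimated_cost(10.0, 0.9, nodes, 0.50),
    )
    for nodes in (1, 4, 16)
}
for nodes, (hours, cost) in estimates.items():
    print(f"{nodes:>2} nodes: {hours:.2f} h, cost {cost:.2f}")
```

Under these assumed numbers, going from 1 to 16 nodes cuts the runtime from 10 hours to about 1.6 hours while the cost rises from 5.00 to 12.50, which is the kind of trade-off a cost-benefit analysis would weigh.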

Automation

Automation aims to reduce effort spent on manual deployment and operational tasks, leading to increased operational efficiency as well as a reduction in human-introduced system anomalies. For more information, see the automation pillar overview.

- Analysis is often iterative, requiring human review and intervention between analysis executions. However, there are situations in which analysis execution may need to be automated and/or scheduled.

- Cloud managed Apache Spark environments, which provide additional automation options and can help streamline overall operations and use, are very commonly used.

- As the main application or interface into the big data analytics system is Python, and Apache Spark provides a script for submitting applications to the cluster, automation of analysis submissions is straightforward and flexible.

- Spark cluster managers also support scheduling jobs across applications. There are different options for cluster managers and managed Spark services in the cloud, many of which provide different capabilities.

Integration

Integration connects this system with other systems for delivering enterprise services and amplifying organizational productivity. For more information, see the integration pillar overview.

- Integration is somewhat inherent to the big data analytics system pattern, with integration taking place at the data tier, as well as with ArcGIS GeoAnalytics Engine and the Apache Spark environment or managed Spark service in the cloud.

- Integration with a self-service mapping, analysis, and sharing system, or other ArcGIS system patterns, is also common for publishing and sharing of analysis results with other users and systems in the enterprise. Learn more about using, integrating, and composing system patterns.

Observability

Observability provides visibility into the system, enabling operations staff and other technical roles to keep the system running in a healthy, steady state. For more information, see the observability pillar overview.

- Observability of a big data analytics system on Apache Spark typically centers around understanding use and performance of underlying infrastructure as well as gaining visibility into the status and progress of large, batch analytic jobs. Approaches to monitoring the use and performance of underlying infrastructure will depend on the Apache Spark environment being used. Cloud managed environments are popular in part due to their strengths in the area of observability. Monitoring of Apache Spark jobs is provided by Apache Spark.
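One way to gain visibility into job status and progress is Spark's monitoring REST API, which serves job information as JSON under `/api/v1` on the application UI. The sketch below is a minimal example, assuming that endpoint is reachable from where the script runs; the helper names and the sample payload are made up for illustration, and the sample includes only a few of the fields a real response contains.

```python
import json
from collections import Counter
from urllib.request import urlopen


def fetch_jobs(base_url, app_id):
    """Fetch the job list for one application from Spark's monitoring REST API."""
    with urlopen(f"{base_url}/api/v1/applications/{app_id}/jobs") as response:
        return json.load(response)


def summarize_jobs(jobs):
    """Count jobs by status and compute overall task completion across all jobs."""
    status_counts = Counter(job["status"] for job in jobs)
    total_tasks = sum(job["numTasks"] for job in jobs)
    completed_tasks = sum(job["numCompletedTasks"] for job in jobs)
    progress = completed_tasks / total_tasks if total_tasks else 0.0
    return {"status_counts": dict(status_counts), "task_progress": progress}


# Hypothetical payload shaped like a subset of the REST API's job records.
sample_jobs = [
    {"jobId": 0, "status": "SUCCEEDED", "numTasks": 200, "numCompletedTasks": 200},
    {"jobId": 1, "status": "RUNNING", "numTasks": 400, "numCompletedTasks": 100},
]
summary = summarize_jobs(sample_jobs)
print(summary)
```

Against a live application, `summarize_jobs(fetch_jobs("http://<driver-host>:4040", "<app-id>"))` would produce the same kind of summary, with the host, port, and application ID depending on the Spark environment in use.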

Other

Additional considerations for designing and implementing a big data analytics system on Apache Spark include:

- Successful operation requires a strong understanding of, and commitment to, Apache Spark. A strong understanding of big, distributed data and analytics concepts is also required.

Related resources: